While working on soon-to-be-released projects, I have often needed to make a staging/testing website publicly accessible so that clients can test it. This is a slippery slope: search engine spiders can get in and index your site before the rest of the world is meant to see it (it happens more than you’d like to think). If the site is for something the world shouldn’t see yet, such as a product or service launch, an early leak can make or break you. There is a word for this very fear of spiders: Arachnophobia.

Arachnophobia is a library designed to address this very concern. It contains an ASP.NET HttpModule and HttpHandler that tell visiting search engine spiders you don’t want your site indexed.

But I didn’t link to my site anywhere?

When you really need search engines to index you, they’re nowhere to be found; other times they poke their nose in when you really wish they wouldn’t.

A problem that sometimes catches developers out when it comes to being indexed too early is the assumption that, because they haven’t linked to their site anywhere, search engines won’t index it.

The main reason this assumption fails is that sites which list domain name registrations and de-registrations often link to new or changed domains. If Google visits one of these sites and finds a link to your new domain name www.super-cool-unicorns.com, it will come for a visit without a second thought.

What is Arachnophobia?

Arachnophobia is two things rolled into one. I have tried to make installation a frictionless exercise so that you can get onto more important things in your day.

An ASP.NET HttpHandler that serves a robots.txt file with a “disallow all” rule, telling spiders to stay away:

```
User-agent: *
Disallow: /
```

This means that when a search engine spider comes to visit your site, if it isn’t an evil spider and it abides by the “non-standardised” standard for robots.txt (there is no actual standards body for this; it was simply agreed in June 1994 by members of the robots mailing list), it will attempt to download the file /robots.txt from the root of your site.
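To see what a well-behaved spider makes of this file, here is a small sketch using Python’s standard-library `urllib.robotparser` (Python is used purely for illustration; Arachnophobia itself is .NET). The helper name `crawler_may_fetch` is hypothetical, and the URL reuses the example domain from earlier.

```python
from urllib.robotparser import RobotFileParser

# The two-line "disallow all" robots.txt shown above.
ROBOTS_TXT = """User-agent: *
Disallow: /
"""


def crawler_may_fetch(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if a robots.txt-compliant crawler may fetch url."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "http://www.super-cool-unicorns.com/launch.html"
    for agent in ("Googlebot", "Bingbot", "SomeOtherBot"):
        # Every agent matches "User-agent: *", so every fetch is refused.
        print(agent, crawler_may_fetch(ROBOTS_TXT, agent, url))
```

Note that this only describes what a *compliant* crawler decides; nothing here stops a crawler that never reads robots.txt.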

An ASP.NET HttpModule that adds the X-Robots-Tag header (supported by Google and Yahoo) to the response for every asset and page request:

```
HTTP/1.1 200 OK
Date: Tue, 25 May 2010 21:42:43 GMT
(…)
X-Robots-Tag: noindex
(…)
```
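Arachnophobia adds this header with an ASP.NET HttpModule. As a rough analogy only (this is not the library’s code), the same idea can be sketched as Python WSGI middleware that wraps `start_response` and appends the header to every response. `robots_header_middleware` is a hypothetical name.

```python
from typing import Callable, Iterable

# Type aliases for readability; WSGI apps are plain callables.
StartResponse = Callable[..., Callable[[bytes], object]]
WSGIApp = Callable[[dict, StartResponse], Iterable[bytes]]


def robots_header_middleware(app: WSGIApp, value: str = "noindex") -> WSGIApp:
    """Wrap a WSGI app so every response carries an X-Robots-Tag header."""

    def wrapped(environ: dict, start_response: StartResponse) -> Iterable[bytes]:
        def start_with_header(status, headers, exc_info=None):
            # Drop any X-Robots-Tag the app already set (header names are
            # case-insensitive), then add ours.
            headers = [(k, v) for k, v in headers if k.lower() != "x-robots-tag"]
            headers.append(("X-Robots-Tag", value))
            return start_response(status, headers, exc_info)

        return app(environ, start_with_header)

    return wrapped
```

Wrapping an existing application is then a one-liner such as `application = robots_header_middleware(application)`. Like the HttpModule, this sits in front of every response, so pages and static assets served by the app all get the header.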

What it is not

Let’s be clear: this is a beta project, so if it eats your babies, I take no responsibility. It carries the “works on my machine” badge, so buyer beware…

Arachnophobia doesn’t actually stop spiders from indexing your site, and your site will still be accessible in a web browser to any passer-by. Relying on this kind of “security through obscurity” should not be considered best practice if you genuinely want to hide your site.

What Arachnophobia does do, however, is easily set up a robots.txt file for your site and add an HTTP header to every response, telling spiders “please don’t index my site”. This is a passive approach, though, and only works with well-behaved crawlers such as those of the main search engines (Google, Bing, Yahoo and so on). Plenty of bad spiders still crawl the web and ignore this kind of “request” not to index.

For mission-critical web applications that you want to show to a client, you should at the very least set up your web server to serve requests only from a whitelist of IP addresses (i.e. your own and your client’s), or put the site behind some form of authentication.
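An IP whitelist is normally configured in the web server itself (for example, IIS’s IP address restrictions). To illustrate the logic only, here is a hypothetical Python WSGI middleware that returns 403 Forbidden unless the client address falls inside an allowed network. The function name and the addresses are assumptions; the addresses come from the reserved documentation ranges and are placeholders.

```python
import ipaddress
from typing import Callable, Iterable, Sequence


def ip_whitelist_middleware(app: Callable, allowed: Sequence[str]) -> Callable:
    """Wrap a WSGI app so only clients from the allowed networks reach it."""
    # A bare address such as "198.51.100.7" becomes a single-host network.
    networks = [ipaddress.ip_network(n, strict=False) for n in allowed]

    def wrapped(environ: dict, start_response: Callable) -> Iterable[bytes]:
        try:
            client = ipaddress.ip_address(environ.get("REMOTE_ADDR", ""))
        except ValueError:
            client = None  # missing or malformed address: treat as not allowed
        if client is not None and any(client in net for net in networks):
            return app(environ, start_response)
        start_response("403 Forbidden", [("Content-Type", "text/plain")])
        return [b"Forbidden"]

    return wrapped


# Placeholder addresses (documentation ranges): an office network and one
# client IP. Replace with the real addresses of you and your client.
ALLOWED = ["203.0.113.0/24", "198.51.100.7"]
```

If the site sits behind a proxy or load balancer, `REMOTE_ADDR` will be the proxy’s address rather than the visitor’s, so a real setup would need to account for that.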

Why do you care?

You may be thinking that you could just as easily add a robots.txt file and the header to IIS by hand, so what is the point?

The idea behind Arachnophobia is threefold:

- Adding robots.txt and the HTTP header almost instantly using NuGet (and removing them just as easily).

- Applying these features to a whole server at once (the binary is signed and can be added to the GAC, and I have a setup project in the works to make this even easier).

- Extending this functionality with more features in future (such as hard blocking based on user agents).

Where can I get it?

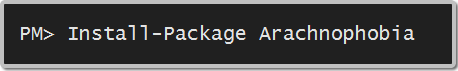

Add it to your ASP.NET website using the NuGet package manager:

Or download from NuGet here.

Download the Source Code from GitHub

Download the Binaries from GitHub

Installation

If you download the binaries and want to install Arachnophobia manually, simply follow the bouncing ball:

1. Reference the “Arachnophobia.IIS.dll” binary in your ASP.NET website.

2. Add the HTTP handler and HTTP module sections to your web.config:

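```xml
<!-- Merge these sections into the existing <configuration> element of web.config -->
<configuration>
  <!-- Classic pipeline (e.g. IIS 6 or IIS 7+ in classic mode) -->
  <system.web>
    <httpModules>
      <add name="AracnophobiaHeaderModule" type="Arachnophobia.IIS.RobotHeaderModule" />
    </httpModules>
    <httpHandlers>
      <add path="robots.txt" verb="*" type="Arachnophobia.IIS.RobotsTxtHandler" />
    </httpHandlers>
  </system.web>
  <!-- IIS 7+ integrated pipeline -->
  <system.webServer>
    <!-- Stops IIS raising an error about the system.web registrations above in integrated mode -->
    <validation validateIntegratedModeConfiguration="false" />
    <!-- Runs managed modules for static files too, so every asset gets the header -->
    <modules runAllManagedModulesForAllRequests="true">
      <add name="AracnophobiaHeaderModule" type="Arachnophobia.IIS.RobotHeaderModule" preCondition="integratedMode" />
    </modules>
    <handlers>
      <add name="AracnophobiaRobotsTxt" path="robots.txt" verb="*" type="Arachnophobia.IIS.RobotsTxtHandler" preCondition="integratedMode" />
    </handlers>
  </system.webServer>
</configuration>
```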
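Once installed, it is worth checking that both pieces are actually being served. The Python sketch below is a hypothetical verification helper, not part of Arachnophobia. It fetches /robots.txt and the home page with `urllib` and checks for a disallow-all rule and a `noindex` directive. The names `check_site`, `blocks_all_crawlers` and `has_noindex` are assumptions, and the header parsing only handles simple comma-separated directives.

```python
from typing import Optional
from urllib.request import urlopen
from urllib.robotparser import RobotFileParser


def blocks_all_crawlers(robots_txt: str) -> bool:
    """True if robots.txt disallows the site root for every user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch("*", "/")


def has_noindex(header_value: Optional[str]) -> bool:
    """True if an X-Robots-Tag value contains noindex (or none, which implies it)."""
    if not header_value:
        return False
    for directive in header_value.split(","):
        # Strip an optional "googlebot:"-style user-agent prefix.
        name = directive.split(":")[-1].strip().lower()
        if name in ("noindex", "none"):
            return True
    return False


def check_site(base_url: str) -> dict:
    """Fetch robots.txt and the home page of base_url and report both checks."""
    root = base_url.rstrip("/")
    with urlopen(root + "/robots.txt") as resp:
        robots_txt = resp.read().decode("utf-8", errors="replace")
    with urlopen(root + "/") as resp:
        header = resp.headers.get("X-Robots-Tag")  # lookup is case-insensitive
    return {
        "robots_txt_blocks_all": blocks_all_crawlers(robots_txt),
        "x_robots_tag_noindex": has_noindex(header),
    }
```

Usage would be along the lines of `check_site("http://staging.example.com")` with your own staging URL. Both values should come back `True` once the handler and module are registered.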