Sometimes your ASP.Net sites crash or hang, and you have no idea why. No exceptions, no event logs. This leaves you a little light on places to start your debugging investigation. Microsoft developers who live a little closer to the metal (i.e. non web developers) will know of tools like WinDbg, but for web developers these tools can seem a little scary and low level. This post is aimed at giving you a closer view of your ASP.Net website at the time of a failure, without the complexity of learning WinDbg on your own.

Consider a scenario: A .Net web app you’ve just developed has gone live. As far as post-deployment worst case scenarios go they usually fit under the following in descending order of emergency’ness (technical term):

- Website crashes and stays down. Users can’t see the site at all.

- A crucial part of the website fails (checkout process?).

- A crucial part of the website fails intermittently with some user sessions stuck in a hung state.

- The website crashes intermittently and is temporarily fixed by an app pool restart.

The first two are pretty big red flags – usually so big that I could scold you for having lazy QA practices, but luckily for both of us you and your team have a deployment plan that includes procedures for initiating a rollback to that backup you took before doing your deployment (you do have a rollback plan, don’t you…?).

The second two are less frequent, but can be really hard to debug, especially if you are unable to replicate the issues in a staging or UAT environment. This is the worst case debugging scenario, and is often where many developers simply turn away from a problem, roll back and go on their merry way. Although it’s not as common, these types of issues sometimes fall into the basket of “that product simply doesn’t work” or “version 1.3 has a major bug, don’t use it”. It doesn’t have to be that way.

At their core these types of production crashes often fall into a few categories:

- 3rd party system or framework failures.

- Memory leaks.

- Threading issues (race conditions or locking issues only seen under load).

As someone who’s developed websites on the Microsoft stack for many years, these types of issues appear to come up about once every 2 years for me. Not common enough that you need to know how to troubleshoot them to save the day (we’ve got backups and a rollback plan, remember?), but annoying enough that I wanted to figure out how to debug these scenarios.

As a .Net developer who is also happy to admit I’m not an “investigate memory dumps” kind of guy, this meant I had to learn the hard way.

This blog post is aimed at developers who, like me, don’t often develop in zeros and ones, but need a guide on how to wield such magical powers.

Things you’ll need

Before continuing you’ll need to install some tools on your local workstation and the remote server you’re planning to debug.

- Admin access to your IIS server so we can take memory dumps and copy framework Dlls

- Debugging Tools for Windows (WinDbg comes as part of this). Install this on both your workstation and the server.

A bit of info about WinDbg

WinDbg is a multipurpose debugger for Windows. It can be used to debug user mode applications, drivers, and the Windows operating system itself in kernel mode.

As well as being used for live debugging scenarios it can be used to investigate and query memory dumps taken within Windows – whether it be from a bluescreen of death, a task manager “mini-dump” or a full memory dump taken using the collector tool it comes with (AdPlus).

This all sounds scary for the uninitiated, but if you take the time to put it to use, it gives you the impression that you’ve unlocked the final frontier of debugging. You are literally able to get a view on objects, memory and application methods in flight for anything running on your machine.

Setting up a scenario if you don’t have a production issue to walk through

If you’re reading this post and want to follow along, I’ve actually created a test website you can run to generate yourself some of these issues for debugging.

I’ll be walking through the crash dumps generated by this site to make it easier to understand. In simple terms, this site has three links on the home page, each of which will generate a different type of failure when run.

Download it from here.

First things first – Collecting the data

So if your application has reached the point where you’re looking into investigating memory dumps, you’re often in need of help.

The Application and System event logs may be showing you nothing, your application needs an App Pool restart every now and then just to keep your website online, and you’re generally unsure why.

You’re looking for the first crumb, so you can follow the trail into your application and investigate its instability further.

You’re at the point where what you really need to answer are a few things:

- What is the current state of your application – what are each of the threads doing?

- What are some of the recent exceptions thrown by your application? Maybe they occurred on a background thread, so you never knew about them?

- What requests are in progress to your .Net site at the time of a crash – evil doers could be bringing your site down.

The first thing you need to do is download the Windows Debugging tools to the server and install them.

Once your application is showing its symptoms, we need to take a memory dump of the IIS worker process running your application.

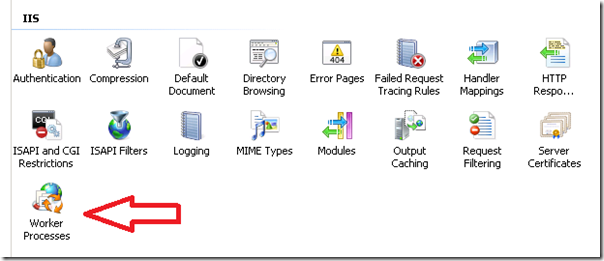

To do this, first find the process id for your website’s application pool. You can do this by opening IIS Manager and clicking on the “Worker Processes” link.

This will show you a list of all the application pool worker processes and their process Id’s.

Take note of the process ID for the worker process that is running your application pool.

Now open up an elevated command prompt on your target server.

Navigate to the folder C:\Program Files (x86)\Windows Kits\8.1\Debuggers\x64.

Then type the following:

adplus.exe -p [processId] -hang -o C:\FolderToStoreMemoryDumpIn

AdPlus supports two modes: -hang for hung processes, and -crash.

When running in crash mode, AdPlus attaches a debugger to your process for the lifetime of that process. To manually detach the debugger from the process you must press CTRL+C to break the debugger. This is great if you know what to do to reproduce the problem as you can replicate it to cause the crash while AdPlus is connected.

In my experience, crashes in managed .Net code can usually be picked up from Event Viewer or your logs. For me, the only thing that draws me towards memory dumps at all is the problems where you don’t know what is going wrong.

As the scenario I’m walking you through involves investigating a worker process after it has become unstable, we’ll be using the -hang option. This simply takes a memory dump of the entire worker process for investigation.

Taking a memory dump of your process takes time – for a large web app that is using many GBs of cache etc., it might take a few minutes depending on the resources available on the box at the time. I suggest taking a few dumps a minute or two apart, as this can be handy to compare threads and see whether waiting threads have moved on in their execution between dumps.
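If you plan to take several dumps in a row, it can help to build the AdPlus command line in a small script rather than retyping it. The following is only a sketch: the helper name build_adplus_command and the process ID 4321 are made up for illustration, and the install folder is the one quoted in this post, which may differ on your server.

```python
from typing import List

# Install folder quoted in this post; adjust it for your Windows Kits version.
DEBUGGERS_DIR = r"C:\Program Files (x86)\Windows Kits\8.1\Debuggers\x64"


def build_adplus_command(process_id: int, output_dir: str, mode: str = "hang") -> List[str]:
    """Return the AdPlus argument list for a hang or crash capture."""
    if mode not in ("hang", "crash"):
        raise ValueError("mode must be 'hang' or 'crash'")
    return [
        DEBUGGERS_DIR + r"\adplus.exe",
        "-p", str(process_id),   # process ID of the IIS worker process
        "-" + mode,              # -hang or -crash
        "-o", output_dir,        # folder the dump is written to
    ]


# Hypothetical process ID, as read from the Worker Processes list in IIS Manager.
command = build_adplus_command(4321, r"C:\FolderToStoreMemoryDumpIn")
print(" ".join(command))
```

The list form is what subprocess.run expects, so on the server you could pass it straight through from an elevated prompt and repeat the call a minute or two later for the second dump.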

Next up we need to collect some more evidence from the scene of the crime. This is critical to guarantee we can properly inspect the dumps later – if you forget to capture these files, and your local environment is running a slightly different version of the .Net binaries, you’ll run into trouble later.

Take a copy of the following files on the destination server for us to bring back locally. Select the correct x86/x64 framework folder depending on what your app pool was running as – same goes for framework folder.

C:\Windows\Microsoft.NET\[Framework or Framework64]\[Framework Version #]\SOS.dll

C:\Windows\Microsoft.NET\[Framework or Framework64]\[Framework Version #]\clr.dll

C:\Windows\Microsoft.NET\[Framework or Framework64]\[Framework Version #]\mscordacwks.dll
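As a rough sketch of how those three paths are put together, the helper below fills in the bitness and version placeholders. The function name framework_debug_files is my own, and the version folder v4.0.30319 is only an example value; use whichever folder your app pool actually runs under.

```python
import ntpath  # applies Windows path rules wherever the script runs

DEBUG_FILES = ("SOS.dll", "clr.dll", "mscordacwks.dll")


def framework_debug_files(is_64_bit, framework_version):
    """Return the full paths of the three files to copy from the server."""
    folder = "Framework64" if is_64_bit else "Framework"
    base = ntpath.join(r"C:\Windows\Microsoft.NET", folder, framework_version)
    return [ntpath.join(base, name) for name in DEBUG_FILES]


# Example values only: a 64-bit app pool on the v4.0.30319 framework folder.
for path in framework_debug_files(True, "v4.0.30319"):
    print(path)
```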

Now navigate to your web application’s folder and copy all of the .PDB symbol files from the bin folder for the current build of your application. If these aren’t on your remote server, but you’ve kept a copy of them in a symbol server or similar instead, you can skip that part as long as you have access to these files and can copy them locally.

Take your PDB’s, your framework files and the dumps and copy them home onto your local workstation.

Setting up your local workstation environment

Now that you’ve copied your memory dumps and symbol files locally we need to get your local environment set up.

Make sure you’ve installed WinDbg (part of the Debugging Tools for Windows).

Now create two folders on your local workstation:

- A folder to store your personal memory dumps and PDB symbol files (I’m storing mine under D:\MemoryDumps).

- A folder to store cached copies of Microsoft PDB’s (I’m storing mine under D:\Symbols).

Now open up your System settings and then Advanced System settings.

We’re going to add a new environment variable, so open the Environment Variables modal.

Create a new system environment variable called “_NT_SYMBOL_PATH”.

Enter the following value:

SRV*D:\Symbols*http://msdl.microsoft.com/download/symbols;D:\MemoryDumps

Note the format of this:

SRV*[LOCATION TO STORE CACHE]*[SYMBOL SERVER URL];[ADDITIONAL SYMBOL PATHS SEPARATED BY SEMI-COLONS]

This basically translates my environment variable to meaning:

- Store my symbol cache in D:\Symbols

- Use Microsoft’s symbol store as a source (http://msdl.microsoft.com/download/symbols).

- Use my local folder D:\MemoryDumps as another symbol source.

Click OK twice and you’re done. By setting this environment variable you are letting WinDbg know where to find missing symbol files when loading the stack traces for your memory dumps – from Microsoft’s servers for framework references, and from your local folder for your application’s PDBs.
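To make the format of the value concrete, here is a small sketch that assembles it from its parts. The function name build_symbol_path is invented for this example; the folders and URL are the ones used in this post.

```python
def build_symbol_path(cache_dir, symbol_server, extra_dirs=()):
    """Assemble a _NT_SYMBOL_PATH value: one SRV entry plus optional local folders."""
    parts = ["SRV*{}*{}".format(cache_dir, symbol_server)]
    parts.extend(extra_dirs)  # each extra folder is searched as a plain symbol source
    return ";".join(parts)


value = build_symbol_path(
    r"D:\Symbols",
    "http://msdl.microsoft.com/download/symbols",
    [r"D:\MemoryDumps"],
)
print(value)
```

If you keep PDBs for several applications in different folders, each one would simply become another semi-colon separated entry at the end.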

Investigating your Memory Dump locally

Now that you’ve set up your symbols paths and installed WinDbg it’s time to actually load your memory dumps into WinDbg.

Copy the following files into the folder you created for your memory dumps (I called mine D:\MemoryDumps).

- Your server’s SOS.dll

- Your server’s clr.dll

- Your server’s mscordacwks.dll

- Your application’s PDB files.

- Your memory dump.

Open up WinDbg (32bit or 64bit depending on the process your dump is from) and select File > Open Crash Dump.

Surf to your memory dump and open it.

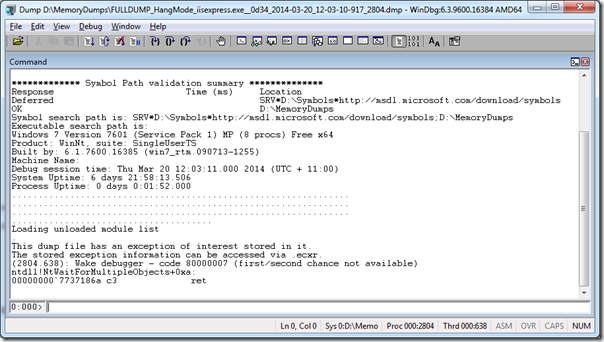

You should be greeted with a screen that looks similar to this. The messages may differ depending on the crashed state of your memory dump. Mine mentions that “there is an exception of interest”, but you might not be so lucky.

The first thing we want to do is load up your symbols, and load up the Microsoft CLR and SOS DLLs.

To do this simply type the following commands into the WinDbg window one after another:

.symfix

.reload

.loadby sos clr

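What this will do is make sure Microsoft’s symbol server is on your symbol path (.symfix), reload the symbols for the loaded modules (.reload), and load the SOS debugging extension from the same folder as the clr module (.loadby sos clr).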

Now we’re in, there are a bunch of commands you can run to query your memory dump to investigate different issues. I’ve categorised a few of these below.

NOTE:

WinDbg is a pretty hardcore piece of debugging software. Its output can be very verbose and hard to decipher if you don’t have a huge amount of experience reading stack traces and thread dumps. That being said, if you take the time to read its output thoroughly, the information it delivers is, bar none, rocket fuel for a debugging problem.

Don’t give up the second it looks hard to decipher. You’ve never seen it before, so of course it looks strange – that’s the whole purpose of this post.

Viewing the current stack trace of each thread

This is by far the most powerful way to first take a look at what is going on – we can query the memory dump to see literally what method each thread is running at the time the memory dump was taken.

~*e !clrstack

This returns the stack trace of each thread. The output may be really long depending on how busy your app was (and therefore how many threads were running) when the dump was taken, and you’ll have to do a lot of scrolling to move through all of your active threads. If you want to read more about deciphering stack traces, take a look at this blog post. In simple terms, the top line of each stack trace is the method that the thread is running.

My example stack trace below shows the controller action from my sample project hitting my call to Thread.Sleep().

OS Thread Id: 0x14b4 (42)

Child SP IP Call Site

000000001735cfe8 00000000773715fa [HelperMethodFrame: 000000001735cfe8] System.Threading.Thread.SleepInternal(Int32)

000000001735d0d0 000007fe870714d6 System.Threading.Thread.Sleep(Int32)

000000001735d100 000007fe870a0e73 AspNetCrash.Web.Controllers.HomeController.sleep() [d:\Code\AspNetCrash.Web\AspNetCrash.Web\Controllers\HomeController.cs @ 35]

000000001735d180 000007fe86b52c41 DynamicClass.lambda_method(System.Runtime.CompilerServices.Closure, System.Web.Mvc.ControllerBase, System.Object[])

000000001735d1c0 000007fe85104dd1 System.Web.Mvc.ActionMethodDispatcher.Execute(System.Web.Mvc.ControllerBase, System.Object[])
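When the output runs to hundreds of threads, scrolling gets old quickly. One option is to paste the text into a file and let a script pick out the first frame per thread that belongs to your own code. This is just a sketch under a few assumptions: the function name first_app_frames is mine, the namespace prefix is that of my sample project, and the parsing assumes frame lines follow the "Child SP, IP, Call Site" layout shown above.

```python
import re

THREAD_HEADER = re.compile(r"^OS Thread Id: 0x([0-9a-fA-F]+) \((\d+)\)")
# A frame line is two hex addresses (Child SP and IP) followed by the call site.
FRAME = re.compile(r"^[0-9a-fA-F]{8,16}\s+[0-9a-fA-F]{8,16}\s+(.+)$")


def first_app_frames(clrstack_output, namespace_prefix):
    """Map debugger thread number -> topmost frame whose call site starts with the prefix."""
    frames = {}
    current_thread = None
    for raw_line in clrstack_output.splitlines():
        line = raw_line.strip()
        header = THREAD_HEADER.match(line)
        if header:
            current_thread = int(header.group(2))
            continue
        frame = FRAME.match(line)
        if frame is None or current_thread is None:
            continue
        call_site = frame.group(1)
        if call_site.startswith(namespace_prefix) and current_thread not in frames:
            frames[current_thread] = call_site
    return frames


SAMPLE = r"""
OS Thread Id: 0x2a38 (6)
Child SP IP Call Site
000000001735cfe8 00000000773715fa [HelperMethodFrame: 000000001735cfe8] System.Threading.Thread.SleepInternal(Int32)
000000001735d0d0 000007fe870714d6 System.Threading.Thread.Sleep(Int32)
000000001735d100 000007fe870a0e73 AspNetCrash.Web.Controllers.HomeController.sleep() [d:\Code\AspNetCrash.Web\AspNetCrash.Web\Controllers\HomeController.cs @ 35]
"""

for thread, call_site in first_app_frames(SAMPLE, "AspNetCrash.").items():
    print(thread, call_site)
```

Threads with no frame in your namespace are simply left out of the result, which is usually what you want when hunting for your own code.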

Getting a list of threads that have exceptions

Another function of WinDbg is being able to get a list of all the current threads that are in an exception state. You can use this to troubleshoot if a part of your application has crashed with an exception – if this happened on a background thread you may have never known about it. Before taking this memory dump I accessed the “Generate StackOverflow” page in my sample site to get it to throw a StackOverflowException.

!threads

This returns all threads and on the right is their current state, as well as describing if there are any “dead” threads:

Notice one thread in the above dump showing that it has reached the StackOverflow Exception my sample site threw.

In your case this could be any type of exception. If you want to find out more about it, you can dig deeper into the exception and read the message attached to it by taking the memory pointer mentioned on the far right hand side of the StackOverflow exception and entering it like so:

!pe 000000033f621188

This will return the actual object:

Exception object: 000000033f621188

Exception type: System.StackOverflowException

Message:

InnerException:

StackTrace (generated):

StackTraceString:

HResult: 800703e9

The current thread is unmanaged
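The HResult line is worth a second look when the message is empty. As a small sketch (decode_hresult is a name I made up, not a WinDbg or SOS command), the standard HRESULT layout can be split into a severity bit, a facility and an error code:

```python
def decode_hresult(value):
    """Split a 32-bit HRESULT into (severity, facility, code)."""
    severity = (value >> 31) & 0x1    # 1 means failure
    facility = (value >> 16) & 0x7FF  # which subsystem raised the error
    code = value & 0xFFFF             # the error code within that facility
    return severity, facility, code


severity, facility, code = decode_hresult(0x800703E9)
print(severity, facility, code)
```

For the value above, facility 7 is the Win32 facility and code 1001 is the Win32 error ERROR_STACK_OVERFLOW, which lines up with the exception type shown.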

Figuring out if threads are hung or waiting

When you want to see if a thread is in a hung or waiting state, you can query using the following command:

!runaway

This will return a list of all the threads and the amount of CPU time that they have consumed.

0:000> !runaway 1

User Mode Time

Thread Time

6:2a38 0 days 0:00:14.789

46:29d8 0 days 0:00:00.421

43:2128 0 days 0:00:00.062

41:25f8 0 days 0:00:00.062

25:25b0 0 days 0:00:00.046

44:1650 0 days 0:00:00.031

48:1524 0 days 0:00:00.015

47:12f0 0 days 0:00:00.015

42:14b4 0 days 0:00:00.015

0:638 0 days 0:00:00.015

49:21e0 0 days 0:00:00.000

45:25c0 0 days 0:00:00.000

40:268c 0 days 0:00:00.000

39:29f0 0 days 0:00:00.000

38:1d10 0 days 0:00:00.000

37:10e0 0 days 0:00:00.000

36:15e8 0 days 0:00:00.000

35:280c 0 days 0:00:00.000

34:29f4 0 days 0:00:00.000

33:24c8 0 days 0:00:00.000

This shows that thread 6 has spent 14.789 seconds of CPU time.

This is where my recommendation to take multiple dumps comes in. If you have taken two dumps seconds apart, you can compare these values.

If the user mode time for a certain thread has not increased in your second dump, that thread is most likely stuck in a waiting state.

We can then correlate this directly with the results of your ~*e !clrstack command. Just look for the thread id to see what it is waiting on.

OS Thread Id: 0x2a38 (6) <-- This is thread “6” from the above !runaway command

Child SP IP Call Site

000000001735cfe8 00000000773715fa [HelperMethodFrame: 000000001735cfe8] System.Threading.Thread.SleepInternal(Int32)

000000001735d0d0 000007fe870714d6 System.Threading.Thread.Sleep(Int32)

000000001735d100 000007fe870a0e73 AspNetCrash.Web.Controllers.HomeController.sleep() [d:\Code\AspNetCrash.Web\AspNetCrash.Web\Controllers\HomeController.cs @ 35]

000000001735d180 000007fe86b52c41 DynamicClass.lambda_method(System.Runtime.CompilerServices.Closure, System.Web.Mvc.ControllerBase, System.Object[])

000000001735d1c0 000007fe85104dd1 System.Web.Mvc.ActionMethodDispatcher.Execute(System.Web.Mvc.ControllerBase, System.Object[])
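Comparing the !runaway output of two dumps by eye is error prone, so here is a sketch of doing it with a script. The names parse_runaway and stalled_threads are my own, the first sample is a few rows from the output above, and the second sample is invented purely to show the comparison.

```python
import re

# Matches rows such as "6:2a38 0 days 0:00:14.789".
RUNAWAY_ROW = re.compile(
    r"(\d+):([0-9a-fA-F]+)\s+(\d+) days (\d+):(\d{2}):(\d{2})\.(\d{3})"
)


def parse_runaway(output):
    """Return {debugger thread number: user mode CPU time in milliseconds}."""
    times = {}
    for match in RUNAWAY_ROW.finditer(output):
        thread, _os_id, days, hours, minutes, seconds, millis = match.groups()
        total_seconds = ((int(days) * 24 + int(hours)) * 60 + int(minutes)) * 60 + int(seconds)
        times[int(thread)] = total_seconds * 1000 + int(millis)
    return times


def stalled_threads(first_dump, second_dump):
    """Threads present in both dumps whose CPU time did not advance."""
    first = parse_runaway(first_dump)
    second = parse_runaway(second_dump)
    return sorted(t for t in first if t in second and second[t] == first[t])


FIRST = """
6:2a38 0 days 0:00:14.789
46:29d8 0 days 0:00:00.421
42:14b4 0 days 0:00:00.015
"""

# Hypothetical second dump: only thread 46 has used more CPU time.
SECOND = """
6:2a38 0 days 0:00:14.789
46:29d8 0 days 0:00:01.250
42:14b4 0 days 0:00:00.015
"""

print(stalled_threads(FIRST, SECOND))
```

A thread that shows up here has not used any CPU between the two dumps. That is consistent with it waiting or being blocked, but it is a hint to go and read its stack rather than proof of a hang.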

Investigating memory leaks

In order to get a view on memory, we need to use the following command

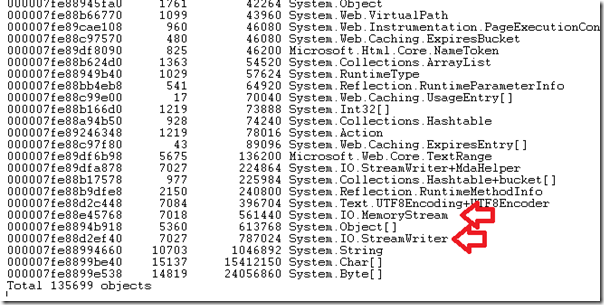

!dumpheap

This will give us an overwhelming amount of information, but what we’re looking at is, from left to right, the method table address, the number of instances of the type, the total amount of memory in bytes used by all instances of the type, and finally the type. I’ve highlighted the two important ones here: the number of objects for a given type and the amount of memory taken up. The list is sorted by what is taking up the most memory.

Looking at the above list, I’ve highlighted two types on the heap, both of which are IO based objects. They could be anything that we know shouldn’t be taking up a huge amount of space, but in my example site I use memory streams without disposing of them. In general you shouldn’t have too many of these objects lying around, because you should be disposing of them quite rapidly. In this case there are over 7000 instances of MemoryStream.
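If the summary is too long to scan by eye, you can paste it into a script and pull out the biggest consumers. The sketch below assumes the four column layout described above (running !dumpheap -stat prints only that summary). The function names are mine, and while the method table addresses in the sample come from this post, the counts and sizes are invented for illustration.

```python
import re

# Method table address, instance count, total size in bytes, type name.
STAT_ROW = re.compile(r"^([0-9a-fA-F]{8,16})\s+(\d+)\s+(\d+)\s+(\S.*)$")


def parse_heap_stats(output):
    """Return a list of (type name, instance count, total bytes) tuples."""
    rows = []
    for line in output.splitlines():
        match = STAT_ROW.match(line.strip())
        if match:
            rows.append((match.group(4), int(match.group(2)), int(match.group(3))))
    return rows


def largest_types(output, top=5):
    """Return the types using the most memory, largest first."""
    return sorted(parse_heap_stats(output), key=lambda row: row[2], reverse=True)[:top]


# Illustrative numbers only; they are not taken from a real dump.
SAMPLE = """
              MT    Count    TotalSize Class Name
000007fe87fd5fa0      120         2880 System.Object
000007fe884d5768     7012       560960 System.IO.MemoryStream
000007fe8802e538     7100      2044800 System.Byte[]
"""

for name, count, total_bytes in largest_types(SAMPLE, top=2):
    print(name, count, total_bytes)
```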

If we want to drill down into these objects we can query again with the System.IO.MemoryStream type as a filter.

!dumpheap -type System.IO.MemoryStream

This will then list all of the objects on the heap that are of type MemoryStream.

Lots more information flies past you, but they are sorted by generation. The objects at the bottom of the list are the oldest, so are more likely to be objects that have leaked from within your application as they have survived a number of garbage collection life times.

The information is listed left to right in columns as Memory address, the Method Table address (for reflection lookup), and Size.

Assuming again that the last items on the list are more likely to be our leaked objects, if we look them up we can get their method handles to see where in your code the leak occurred.

Before we do this though, you can actually inspect the object by taking its address (the far left number) and typing:

!do [address]

This will allow you to inspect the object. You can then use the addresses of its fields to inspect their values. If this was a SQL connection, for example, I could !do into the SQL connection, and then !do into the address of its connection string field (listed as its value). Array fields can be dumped with !da instead.

My boring example of my MemoryStream object with all of its fields and values:

!do 00000004c0106e78

Name: System.IO.MemoryStream

MethodTable: 000007fe884d5768

EEClass: 000007fe884f6be8

Size: 80(0x50) bytes

File: C:\WINDOWS\Microsoft.Net\assembly\GAC_64\mscorlib\v4.0_4.0.0.0__b77a5c561934e089\mscorlib.dll

Fields:

MT Field Offset Type VT Attr Value Name

000007fe87fd5fa0 4000199 8 System.Object 0 instance 0000000000000000 __identity

0000000000000000 400200e 10 ...eam+ReadWriteTask 0 instance 0000000000000000 _activeReadWriteTask

0000000000000000 400200f 18 ...ing.SemaphoreSlim 0 instance 0000000000000000 _asyncActiveSemaphore

000007fe8802e538 40020ff 20 System.Byte[] 0 instance 000000043ff49370 _buffer

000007fe87fdf5e0 4002100 30 System.Int32 1 instance 0 _origin

000007fe87fdf5e0 4002101 34 System.Int32 1 instance 225 _position

000007fe87fdf5e0 4002102 38 System.Int32 1 instance 225 _length

000007fe87fdf5e0 4002103 3c System.Int32 1 instance 256 _capacity

000007fe87fdc510 4002104 40 System.Boolean 1 instance 1 _expandable

000007fe87fdc510 4002105 41 System.Boolean 1 instance 1 _writable

000007fe87fdc510 4002106 42 System.Boolean 1 instance 1 _exposable

000007fe87fdc510 4002107 43 System.Boolean 1 instance 1 _isOpen

000007fe89482c58 4002108 28 ...Int32, mscorlib]] 0 instance 0000000000000000 _lastReadTask

The fields that have simple values, like _isOpen and _length, have their values shown directly in the above table. For child objects, however, I can view the actual contents of the _buffer field by passing its memory address (listed as its value) to !da: !da 000000043ff49370. As this is a boring example of a memory stream, it will simply output the stream’s contents. These look impossible to understand, but this is a memory stream, so it isn’t really meant to be human readable. The point is that you can pull out the contents of any field in this way.

[230] 000000043ff49466

[231] 000000043ff49467

[232] 000000043ff49468

[233] 000000043ff49469

[237] 000000043ff4946d

...

[252] 000000043ff4947c

[253] 000000043ff4947d

[254] 000000043ff4947e

[255] 000000043ff4947f

Once you have an object from typing !do [object address] you can actually take a look at the methods in your application that are holding a reference to that object by using the following command.

!gcroot [address to object]

This will show you the reference stack of an object allowing you to then see where your memory leak is coming from.

An example of what this looks like:

!gcroot 00000004c0106e78

Note: Roots found on stacks may be false positives. Run "!help gcroot" for

more info.

Scan Thread 10 OSTHread 7ec

Scan Thread 11 OSTHread e44

Scan Thread 12 OSTHread e04

DOMAIN(003CB450):HANDLE(Pinned):2f512f8:Root: 04fe78e0(System.Object[])->

03fef5bc(AspNetCrash.Web.Controllers.HomeController.memoryleak)->

03ff0710(AspNetCrash.Web.Controllers.HomeController)->

The above shows the source of my memory leak! We can see it is from within my method AspNetCrash.Web.Controllers.HomeController.memoryleak.

Errors you may stumble across along your journey

If you haven’t copied your clr.dll, sos.dll or mscordacwks.dll from your production environment into the same folder as your memory dump, then at any stage of running the commands above you may be met with a nasty error message like this:

Failed to load data access DLL, 0x80004005

Verify that 1) you have a recent build of the debugger (6.2.14 or newer)

2) the file mscordacwks.dll that matches your version of mscorwks.dll is

in the version directory

3) or, if you are debugging a dump file, verify that the file

mscordacwks___.dll is on your symbol path.

4) you are debugging on the same architecture as the dump file.

For example, an IA64 dump file must be debugged on an IA64

machine.

You can also run the debugger command .cordll to control the debugger's

load of mscordacwks.dll. .cordll -ve -u -l will do a verbose reload.

If that succeeds, the SOS command should work on retry.

If you are debugging a minidump, you need to make sure that your executable

path is pointing to mscorwks.dll as well.

Or this one if your local environment is running a different version of .Net:

The version of SOS does not match the version of CLR you are debugging. Please

load the matching version of SOS for the version of CLR you are debugging.

CLR Version: 4.0.30319.1008

SOS Version: 4.0.30319.18408

Failed to load data access DLL, 0x80004005

Verify that 1) you have a recent build of the debugger (6.2.14 or newer)

2) the file mscordacwks.dll that matches your version of clr.dll is

in the version directory or on the symbol path

3) or, if you are debugging a dump file, verify that the file

mscordacwks___.dll is on your symbol path.

4) you are debugging on supported cross platform architecture as

the dump file. For example, an ARM dump file must be debugged

on an X86 or an ARM machine; an AMD64 dump file must be

debugged on an AMD64 machine.

These error messages are related to the fact that WinDbg can't load your CLR or SOS files for orchestration as they need to match the environment your memory dump was taken from – easy solution, go grab these files from your server environment and try loading WinDbg again.

Wrapping Up

As you can see, if you know a bit more about using WinDbg and aren’t afraid to have some patience, you can investigate the state of your application at the time of the crash without it getting too scary. WinDbg is a powerful tool and its output is often very verbose, but if you take the time to read its output you can gain a lot of knowledge about your application’s state at the time of a memory dump, and from this gain the breadcrumb trail you need to start your debugging investigation.

Tess Ferrandez from Microsoft has a great blog and tutorial series to learn more about WinDbg, and I highly recommend it for anyone wanting to get a little deeper.

There is also a long list of WinDbg commands and their usage here for a deeper browse.

If you’d like to give this all a try without having an app that’s broken, feel free to download my example project from Github.
