When maintaining applications built in ways that make unit testing high friction, difficult or downright impossible, we often turn to integration tests or no tests at all. Poking around the outside of our logic to check the outcomes our code produces is often fiddly, brittle and in most cases so time consuming it just doesn’t happen. Lucky for us all, if you’ve ever been unable to test part of your business logic, Visual Studio 2012 Update 2 offers an answer to your prayers that you just might not be aware of.

Recently I changed jobs, from working for external clients on creative-driven work to running an internal development team. This has been the other side of the universe in more ways than one.

With this change in scenery came a change in the types of projects I work on, with a shift towards more brownfield work. Out with the new and in with the old… or something.

My old role consisted of about 10% legacy projects and 90% new ones. Often the 10% brownfield projects were themselves less than 2 years old. A common attitude this bred within my team whenever they had to go back to an older project was something along the lines of:

“…Oh No! You mean I have to work in .Net 3.5…!”

Us poor Gen-Y developer equivalents…

My new role, and the change to supporting a number of older applications, has brought with it a need to look at ways of addressing untested, untestable or black box legacy code so that we can refactor it with more confidence.

The Problem

Writing unit testable code has been an ever growing trend in .Net, but 5-6 years ago it was still gaining mass acceptance in our part of the world. That slow start was partially led by Microsoft themselves: Visual Studio 2008 Pro was the first version to have unit test support built in outside of the “team suite”, “ultimate” or “architect” editions. Until then, it gave the impression that “unit tests were only for the rich”.

What I’ve seen in many workplaces over the years is that this lack of unit test penetration led a lot of .Net developers to simply never learn the good practices, such as separation of concerns and modular coding, that lead to testable code. This isn’t a complaint; we were all there once. It’s just a statement that I saw the effects of this in a lot of legacy projects that all suffered from:

- Widespread usage of static classes and methods (i.e. no interfaces, no virtual methods etc.).

- A lack of dependency injection (no way to easily replace functionality with a mock or a stub).

- Tightly coupled code (a domain class that talks to the database, or a business layer method that refers to HttpContext).
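To make that list a little more concrete, here is a minimal sketch of the difference. All of the names in it (LegacyInvoiceRules, InvoiceRules, IClock, FixedClock) are made up for illustration and don't come from any real project. The first class is the kind of static, tightly coupled code I keep finding; the second shows the same rule with its dependency passed in, which is what makes it trivially testable.

```csharp
using System;

// Hypothetical legacy-style code: a static method with DateTime.Now baked in.
// A test has no way to control what "now" is without intercepting it at runtime.
public static class LegacyInvoiceRules
{
    public static bool IsOverdue(DateTime dueDate)
    {
        return DateTime.Now > dueDate;
    }
}

// Hypothetical seam: the clock becomes a dependency that can be swapped out.
public interface IClock
{
    DateTime Now { get; }
}

// A stub clock for tests that always reports the same moment.
public sealed class FixedClock : IClock
{
    private readonly DateTime _now;

    public FixedClock(DateTime now)
    {
        _now = now;
    }

    public DateTime Now
    {
        get { return _now; }
    }
}

// The same rule as above, but the clock is injected through the constructor.
public sealed class InvoiceRules
{
    private readonly IClock _clock;

    public InvoiceRules(IClock clock)
    {
        _clock = clock;
    }

    public bool IsOverdue(DateTime dueDate)
    {
        return _clock.Now > dueDate;
    }
}
```

A test can build InvoiceRules with a FixedClock and assert on the result without touching the system clock. With the static version there is nowhere to plug a stub in, and that is exactly the situation most legacy projects are in.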

This meant that when .Net developers started out with unit testing, they were often forced into writing end-to-end integration tests to get around their tightly coupled architecture.

```csharp
[TestMethod]
public void UserSignup_ValidFormSubmission_UserIsCreated()
{
    var testHelper = loadApplicationOverHttp(Configuration.LocalSiteUrl);
    testHelper.SubmitSignupDataOverHttp("username", "password");

    var dbEntry = DataLayer.GetUser("username");

    Assert.IsNotNull(dbEntry, "No user was created in the database");
}
```

From a build and automation perspective, this type of testing is expensive, as the tests take a long time to build and run. To start with, you need to have a database server online just to run your tests. You often need a clean database, and it needs to be kept in sync with production or a known state. It’s harder to automate when working with CI, and, hell, when you’re a new developer on the team you’ve got to work hard to get your workspace into a happy place to even run these kinds of integration tests.

This lack of smooth experience only leads to one thing: less testing.

While integration tests are useful for knowing that your application works end-to-end, their fiddly and brittle nature is why unit tests are the preferred way to test code paths across your entire codebase. But unit testing takes architecture choices from day 1 to make your application easily testable, so brownfield projects caught out by a lack of good architecture, and with little budget for refactoring work, often flounder in a unit testing no man’s land.

That was until Microsoft Moles came along.

Moles was originally a free piece of framework/tooling from Microsoft Research that worked with Visual Studio 2010 and, albeit lacking some polish, it brought with it a huge power of interception.

Sadly, Microsoft took Moles away from us with the launch of Visual Studio 2012. In a similar turn to how they approached VS 2005, they opted to only include support for Moles in the Ultimate edition.

That was until Update 2 for Visual Studio 2012 was released last week. Download it today and you get all of the Moles magic, now called Fakes (they changed the name), with Visual Studio 2012 support and awesome IDE integration.

Microsoft Moles/Fakes

One of the greatest things about unit testing in more dynamic languages like Ruby or Python is that testing logic is inherently easier, because you can simply replace functionality at runtime. Mocking is almost a non-event thanks to this dynamic nature.

Thanks to Fakes some of this power is yours to tap into with VS 2012 just as it was when they first released Moles in 2010.

Moles has grown into what we now know as Microsoft Fakes, and it is an incredibly powerful bit of kit for replacing functionality in your application at runtime. This power then allows you to intercept legacy code and make it testable.

A good example of this power is the shim below for System.DateTime.Now and its get accessor. Microsoft Fakes lets me test a static method, or anything that depends on it, with ease; in this case by changing the current date to 1st January 1970:

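```csharp
using System;
using Microsoft.QualityTools.Testing.Fakes;
using Microsoft.VisualStudio.TestTools.UnitTesting;

[TestClass]
public class MyDateTimeProviderTests
{
    [TestMethod]
    public void MyDateProvider_ShouldReturnDateAsJanFirst1970()
    {
        // Shims only apply inside the ShimsContext and are removed when it is disposed.
        using (ShimsContext.Create())
        {
            // Arrange: replace the DateTime.Now getter with a fixed date.
            System.Fakes.ShimDateTime.NowGet = () => new DateTime(1970, 1, 1);

            // Act:
            var currentDate = MyDateTimeProvider.GetTheCurrentDate();

            // Assert:
            Assert.AreEqual(new DateTime(1970, 1, 1), currentDate);
        }
    }
}

public class MyDateTimeProvider
{
    public static DateTime GetTheCurrentDate()
    {
        return DateTime.Now;
    }
}
```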

Pretty cool!?

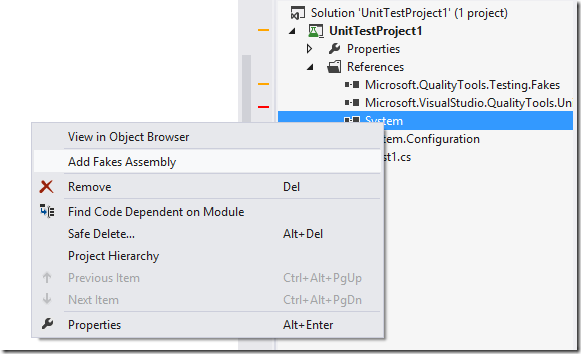

To get this level of interception, all you need to do is download Visual Studio 2012 Update 2, then right click on a Reference in your Unit Test Project and add a Fakes Assembly (a generated assembly containing shim and stub types for that reference).

Then all you need to do is:

- Wrap your logic in a ShimsContext.

- Use the new convention-based syntax, a “Shim[Classname]” class with “PropertyName[Get/Set]” members, to define what your shim will do.

- Go grab a <insert tasty beverage of your choosing/>

And you’re off and away!

Stop reading – Start testing the untestable!

With the power of Microsoft Fakes offered in Visual Studio 2012 Update 2 there is now no excuse not to test that old .Net project you and your team members are afraid to refactor. Get to it.
