In ASP.Net land we are often led to think the “Microsoft way” about a lot of things. Performance testing and benchmarking is one of those tasks where we often find ourselves looking at commercial tooling or products to find out how our applications handle load. Meanwhile, a lot of web developers on other stacks are doing it with great free tooling. There is nothing stopping us from stealing the best parts of these stacks and bringing them back to the land of ASP.Net.

Image: Martin Heigan

Tooling tooling everywhere…

If you’re developing ASP.Net websites and web services you’ve probably turned to a few different options to performance test your pages and web services.

You may be lucky and happen to own a copy of Visual Studio Ultimate and have had a play with the Performance and Stress testing tooling that comes along with it.

In the non-Microsoft tooling world I’ve used tools such as Load UI and Soap UI successfully on a number of projects. There are many commercial products that do similar jobs.

But a lot of these tools don’t make it clear how to get answers to some simple questions that both we and our clients often want answered.

Simple questions like:

“How many users can my application take at once?”

Or other gems that you might like to know:

“How long does my page take to execute? How long does it take when 100 visitors are using it at the same time?”

Info for nothing, and the tests for free…

When you install Apache you get a whole heap of tools along with it. We normally think of Apache as a Linux/Unix web server, but there is a Windows version that contains all the same power.

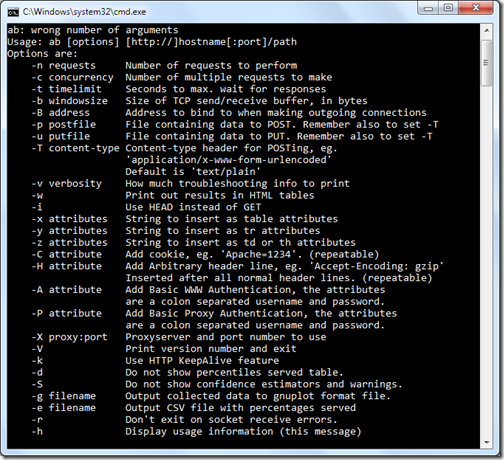

One of the included tools that comes with Apache is the performance testing tool Apache Bench.

Apache Bench is a powerful and simple command line tool for performance testing and gathering metrics on how your application pages respond under load. It does this with very little CPU usage and a very small memory footprint, allowing you to run it on lower-spec hardware than a lot of the commercial tools require. This makes it great if you want to use old machines on your network to run tests concurrently from different network addresses.

Apache Bench lets you send a defined number of concurrent requests, up to a set total number of requests, and view results on how your pages respond.

This allows us to do the following:

- Test a page with 10 users at a time for 1,000 requests

- Test a page with 50 users at a time for 10,000 requests

- Test a page with 100 users at a time for 100,000 requests

- etc.

- etc.

Do the above for 1 minute, 5 minutes…

And get response times, failure rates, requests per second and transfer rates for each level of user load.

Actually get data that can help you estimate how your application will function once it goes live.

For Free.

WARNING

This is not a “this is how you should perform tests on your website to become invincible” post. There are plenty of blog posts and articles out there covering strategies and approaches for that. This post is, however, a guide to basic micro-benchmarking of individual pages during development, testing performance in ways that you can’t on your dev machine without other software. Performance testing tools and website load generators such as Apache Bench can be dangerous in shared hosting environments and when used without your web host’s knowledge, so please take precautions before testing on other people’s sites or hardware.

Performance testing - Thinking it through

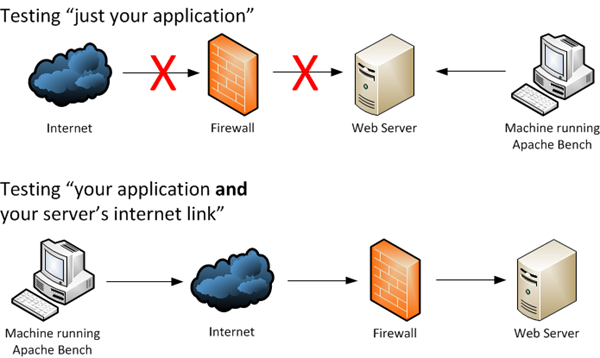

As web applications use networking bandwidth, you need to take this into account when deciding how you are going to be doing your testing.

Are you testing just your application? Or are you testing your application and your server’s internet bandwidth (and your test machine’s bandwidth…)?

If you want to know how your application responds independent of the internet, you should test from a machine that is on the same internal network as your webserver to ensure that your internet pipe is not the bottleneck in testing. This will give you upper bounds on your application, but will not give you the full picture for launch day.

If you want to also test the internet link that your server is serving through (i.e. the real-world), run your tests from machines outside your server’s internal network – somewhere on the internet.

Maybe an Amazon EC2 Instance? (If so use the Linux Apache Binaries – Linux instances of EC2 are cheaper…)

I do suggest you do this with your ISP’s knowledge and only when you are running dedicated servers. Otherwise you may become a willing participant in a DoS attack against your web host, and the performance hit may take down the other sites on your box at the same time.

Making it all happen

Download the XAMPP zip file.

From the bin folder of the zip file, extract the file ab.exe to a location on the machine you will be running your tests from, somewhere you can easily get to.

For the purposes of this demo I've placed mine in “C:\testing\”.

On the machine you will be testing from, open a command prompt window and move into the directory created above (enter “cd C:\testing\”).

Now we will start by issuing 1,000 requests from 10 concurrent clients.

The -n is the number of requests to process. We want this to be high enough that the test runs for a minimum amount of time (30 seconds to a minute should give you an idea of the numbers).

The -c is the number of concurrent requests to run. This is the equivalent of that number of people hitting your site at the same time.

ab.exe -n 1000 -c 10 http://mywebsite.com/mycontroller/myaction
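To get a feel for how large -n needs to be, it helps to relate it to throughput: a run lasts roughly the total number of requests divided by the requests per second the page sustains. The sketch below is only a back-of-the-envelope helper; the function names are made up for illustration, and the 105.90 requests per second figure is the uncached result reported later in this post.

```python
import math


def estimated_duration_seconds(total_requests: int, requests_per_second: float) -> float:
    """Rough wall-clock time ab needs to complete total_requests."""
    return total_requests / requests_per_second


def requests_for_duration(requests_per_second: float, seconds: float) -> int:
    """Smallest -n value that keeps ab busy for at least `seconds`."""
    return math.ceil(requests_per_second * seconds)


if __name__ == "__main__":
    rate = 105.90  # requests per second, from the uncached result in this post
    print(f"-n 1000 lasts about {estimated_duration_seconds(1000, rate):.1f} s")
    print(f"-n for a 60 s run: {requests_for_duration(rate, 60)}")
```

At that rate 1,000 requests are over in under ten seconds, which is why a larger -n is worth using once you know roughly how fast the page is.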

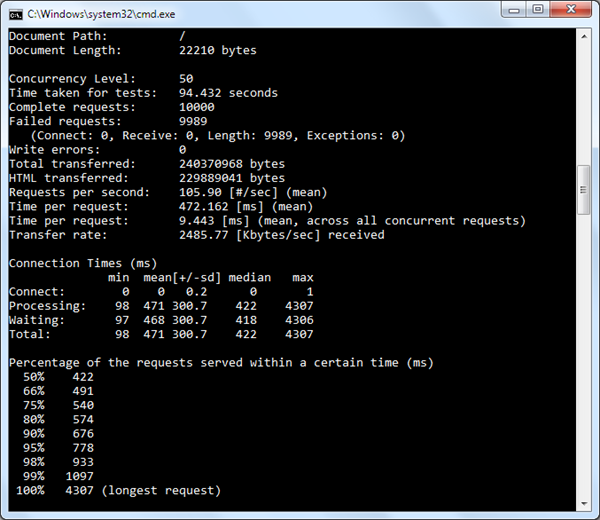

This will return a result similar to the below (the below result was run with 10,000 requests from 50 clients against my blog home page):

As you can see from my results above, my page handled 105.90 requests per second; the fastest request took 422ms and the slowest 4307ms. I have pummelled this page, making 50 requests at once over many requests, but these response times are still not what I had hoped for.

My testing above was conducted with Apache Bench, and I purposely made this page perform worse by turning off ASP.Net caching. While I am testing nothing but my home page, this does show me that it needs work. I want to get the homepage response time down to around the 100ms mark.
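If you save ab's report to a text file, the headline numbers can be pulled out with a few regular expressions. The following is a minimal sketch, not a complete parser: the function name is hypothetical, and the sample report is abbreviated. Its requests-per-second figure and the min/max of the Total row come from this post, while the mean, sd and median values in that row are made-up filler.

```python
import re

# Abbreviated ab report. 105.90, 422 and 4307 come from this post;
# the mean, sd and median in the Total row are made-up filler values.
SAMPLE_REPORT = """\
Concurrency Level:      50
Complete requests:      10000
Failed requests:        0
Requests per second:    105.90 [#/sec] (mean)

Connection Times (ms)
              min  mean[+/-sd] median   max
Total:        422  472 150.0    450    4307
"""


def parse_ab_report(text: str) -> dict:
    """Pull a few headline numbers out of ab's plain-text report."""
    def find(pattern: str) -> re.Match:
        match = re.search(pattern, text, re.MULTILINE)
        if match is None:
            raise ValueError(f"pattern not found: {pattern}")
        return match

    total = find(r"^Total:\s+(\d+)\s+(\d+)\s+([\d.]+)\s+(\d+)\s+(\d+)")
    return {
        "concurrency": int(find(r"^Concurrency Level:\s+(\d+)").group(1)),
        "complete": int(find(r"^Complete requests:\s+(\d+)").group(1)),
        "failed": int(find(r"^Failed requests:\s+(\d+)").group(1)),
        "requests_per_second": float(find(r"^Requests per second:\s+([\d.]+)").group(1)),
        "min_ms": int(total.group(1)),
        "max_ms": int(total.group(5)),
    }


report = parse_ab_report(SAMPLE_REPORT)
# ab's mean "Time per request" is concurrency divided by throughput.
mean_ms = report["concurrency"] * 1000 / report["requests_per_second"]
print(report)
print(f"mean time per request: {mean_ms:.0f} ms")
```

The last two lines show a handy cross-check: with 50 concurrent clients and 105.90 requests per second, the mean time per request works out to about 472ms, well above the 100ms target.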

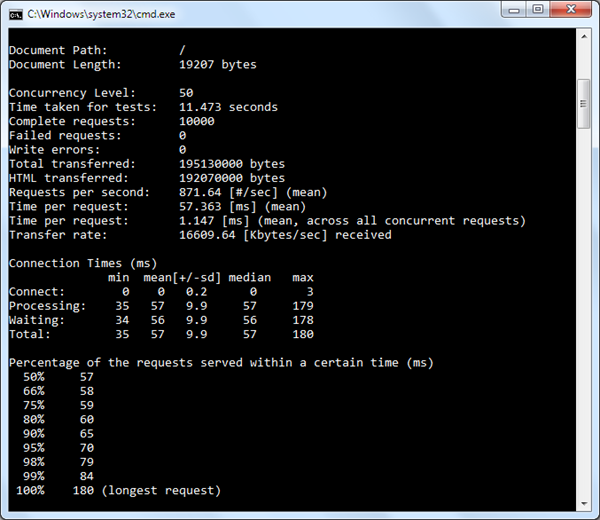

If I turn my ASP.Net MVC controller caching back on, the result becomes much better:

This is now handling 871.64 requests per second; the fastest request took 57ms and the slowest 180ms. Wow, that’s a lot faster.

When testing your pages you want to start with a number of concurrent sessions where the response times and requests per second are repeatable and fast (around 100ms), and then walk this number up until you get a feel for the peak your page can take.

So try with 20 concurrent requests:

ab.exe -n 1000 -c 20 http://mywebsite.com/mycontroller/myaction

If this is successful while still keeping response times around 100ms, try 30, then 40, then 50 concurrent requests (the -c value is the number of concurrent requests), and so on until you start to see your application degrade (degrade = response times higher than, say, 300-400ms).
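The walk-up described above is easy to automate. The sketch below is one possible approach rather than a prescribed method: it assumes ab is on your PATH, reads the first "Time per request" line of the report (the per-request mean), and stops at the first concurrency level whose mean exceeds a limit. The function names and the 300ms default are illustrative choices.

```python
import subprocess
from typing import Callable, Iterable, Optional


def mean_ms_from_report(text: str) -> float:
    """Read the first 'Time per request' line (the per-request mean) from ab's report."""
    for line in text.splitlines():
        if line.startswith("Time per request:"):
            return float(line.split()[3])
    raise ValueError("no 'Time per request' line found")


def run_ab(url: str, requests: int, concurrency: int) -> float:
    """Run ab once and return the mean time per request in ms. Assumes ab is on PATH."""
    result = subprocess.run(
        ["ab", "-n", str(requests), "-c", str(concurrency), url],
        capture_output=True, text=True, check=True,
    )
    return mean_ms_from_report(result.stdout)


def find_degradation_point(measure: Callable[[int], float],
                           levels: Iterable[int],
                           limit_ms: float = 300.0) -> Optional[int]:
    """Return the first concurrency level whose mean response time exceeds limit_ms."""
    for level in levels:
        mean_ms = measure(level)
        print(f"-c {level}: {mean_ms:.0f} ms")
        if mean_ms > limit_ms:
            return level
    return None


if __name__ == "__main__":
    url = "http://mywebsite.com/mycontroller/myaction"
    # Steps through -c 10, 20, 30, 40, 50 with 1,000 requests each.
    find_degradation_point(lambda c: run_ab(url, 1000, c), range(10, 60, 10))
```

Passing the measurement in as a function keeps the stepping logic separate from the call to ab, so you can try it out with canned numbers before pointing it at a real site.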

Taking it one step further

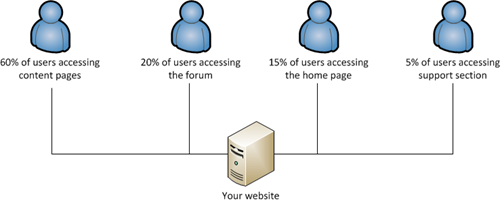

So now that you’ve micro-benchmarked a single page, it’s time to think about ways we can take Apache Bench further. We want to test multiple pages concurrently, maybe with differing loads (as not everyone will be surfing your site in an even spread across all your pages).

Batching requests.

When people surf your site during a busy period you will have differing loads across differing pages, and your testing should reflect that.

As Apache Bench is a command line application you can easily batch multiple executions together to create this load.

As it writes its results to standard output, we can redirect them to text files.

If I want to have, say, 50 users on the site at once, I can split that number across multiple concurrent Apache Bench sessions from my test machine, weighted by how I expect visitors to spread across my pages.

Place something similar to the below in a batch file to simulate different areas of your site being accessed at the same time.

REM 30 users accessing a content page
start cmd /c ab.exe -n 1000 -c 30 http://mywebsite.com/mycontentpage > results-contentpage.txt

REM 10 users accessing a forum page
start cmd /c ab.exe -n 1000 -c 10 http://mywebsite.com/myforum/thread-123 > results-forumpage.txt

REM 7 users accessing the homepage at once
start cmd /c ab.exe -n 1000 -c 7 http://mywebsite.com/ > results-homepage.txt

REM 3 users accessing the support section
start cmd /c ab.exe -n 1000 -c 3 http://mywebsite.com/support/list > results-support.txt

Save this as a new batch file, apache-bench-tests.bat, and run it from the command line. This should spawn a number of command line sessions, all running Apache Bench concurrently.

The results from each of your benchmarks will be saved in the text files named after the > in each batch run.
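If your test machine is not running Windows (a Linux EC2 instance, say), the same idea can be sketched in Python. This is an illustrative alternative to the batch file, not a replacement for it: it assumes ab is on your PATH, the function names and results file names are made up, and the traffic shares are simply the batch file's 30/10/7/3 split of 50 users expressed as percentages.

```python
import subprocess

# Assumed traffic shares (percent), derived from the batch file's 30/10/7/3 split.
SHARES = {
    "http://mywebsite.com/mycontentpage": 60,
    "http://mywebsite.com/myforum/thread-123": 20,
    "http://mywebsite.com/": 14,
    "http://mywebsite.com/support/list": 6,
}


def split_users(total_users: int, shares: dict) -> dict:
    """Divide total_users across pages in proportion to each page's share.

    Rounding means the parts may not always add up exactly to total_users.
    """
    share_sum = sum(shares.values())
    return {url: round(total_users * share / share_sum) for url, share in shares.items()}


def launch_all(users_by_url: dict, requests: int = 1000) -> None:
    """Start one ab process per URL at the same time, then wait for all of them."""
    running = []
    for index, (url, users) in enumerate(users_by_url.items()):
        out = open(f"results-{index}.txt", "w")
        proc = subprocess.Popen(
            ["ab", "-n", str(requests), "-c", str(users), url], stdout=out
        )
        running.append((proc, out))
    for proc, out in running:
        proc.wait()
        out.close()


if __name__ == "__main__":
    launch_all(split_users(50, SHARES))
```

Keeping the shares in one place makes it easy to rerun the same mix at 100 or 200 total users without recalculating each -c value by hand.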

Testing from multiple machines at the same time.

If you really want to test your application in a more realistic setting, you need to test everything, including your network hardware.

For this I would suggest setting up a collection of machines or virtual machines and running a batch script with Apache Bench on each of them to simulate traffic to your site from multiple physical locations.

Testing forms and search pages by posting data.

You can test your application using more than just GET requests.

Apache Bench supports HTTP PUT and POST, and takes a text file containing your POST or PUT data as part of the command.

ab.exe -n 1000 -c 5 -p file-that-you-will-post.txt http://mywebsite.com/

ab.exe -n 1000 -c 5 -u file-that-you-will-put.txt http://mywebsite.com/
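For a typical HTML form, the POST file holds URL-encoded field data, and ab's -T option sets the Content-Type header (it defaults to text/plain, which a form handler is unlikely to bind). A minimal sketch of producing such a file follows; the field names and function names are hypothetical, so substitute whatever your form actually posts.

```python
from urllib.parse import urlencode


def encode_form(fields: dict) -> str:
    """URL-encode form fields the way a browser submits a standard HTML form."""
    return urlencode(fields)


def write_post_file(path: str, fields: dict) -> None:
    """Write the encoded body to the file that ab will read via -p."""
    with open(path, "w", encoding="ascii") as handle:
        handle.write(encode_form(fields))


def ab_post_command(url: str, post_file: str, requests: int = 1000, concurrency: int = 5) -> list:
    """Build the ab argument list for a form POST, with options placed before the URL."""
    return [
        "ab", "-n", str(requests), "-c", str(concurrency),
        "-p", post_file,
        "-T", "application/x-www-form-urlencoded",
        url,
    ]


if __name__ == "__main__":
    # Hypothetical search form fields.
    write_post_file("file-that-you-will-post.txt", {"q": "apache bench", "page": 1})
    print(" ".join(ab_post_command("http://mywebsite.com/", "file-that-you-will-post.txt")))
```

Every request in the run posts the same body, so this suits pages where identical submissions are harmless, such as a search form.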

Summary

The above is not a scientific, in-depth approach to performance testing, but it will definitely give you a better feel for how each page of your application responds under load, and on the cheap.

By scripting multiple pages of your site to be hit concurrently you will get a clearer indication of how it will perform in the wild, and which pages may need optimisation or caching to handle extra concurrent traffic. When those spikes happen on launch day, you will either be better prepared or know how bad the situation will be. Profiling and performance work should get you the rest of the way there.
