I have recently had a couple of interesting discussions with a few different people, on Twitter and in “the real world”, about the use of third party build dependencies such as unit testing frameworks, database versioning tools and other command line executables in your build. The topic of these discussions has been where these dependencies should be located: inside your project, or installed on your build server.

Wait… What are you talking about again?

When I refer to “third party build dependencies” I am talking about tools that you use as part of your build. If you have unit test projects inside your solution, you want to run these unit tests using a tool such as NUnit or MSTest. If you have auditing or validation tools, such as the web.config validator WCSA that Troy posted about, you want to run these as part of your build too.

The question is where should these tools reside?

Should you install NUnit version x.xx.xx.x on your build server and call it using a static file path such as C:\program files\xxx\xxx.exe?

Or should you instead place the specific version of NUnit you’ve been using inside your solution and call it using a path relative to your build’s output?
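As a rough sketch of the second option, the build script can look a tool up relative to the checkout instead of relying on an install location. The `resolve_tool` helper, the `tools/nunit` folder and the runner file name are my own illustrative assumptions here, not a layout that any tool requires.

```python
import tempfile
from pathlib import Path


def resolve_tool(repo_root: Path, relative_path: str) -> Path:
    """Locate a tool that is checked in with the project (hypothetical helper)."""
    tool = repo_root / relative_path
    if not tool.is_file():
        raise FileNotFoundError(
            f"{relative_path} is not checked in under {repo_root}"
        )
    return tool


if __name__ == "__main__":
    # Simulate a checkout that carries its own test runner.
    with tempfile.TemporaryDirectory() as checkout:
        root = Path(checkout)
        runner = root / "tools" / "nunit" / "nunit-console.exe"
        runner.parent.mkdir(parents=True)
        runner.write_bytes(b"")  # empty placeholder standing in for the real binary
        print(resolve_tool(root, "tools/nunit/nunit-console.exe"))
```

Because the only input is the checkout location, the same lookup works on a build server and on a developer machine.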

Getting the correct answer to these questions for your project is what this post is about.

Opinion is, as Opinion does

This topic, like a number of other eXtreme development practice discussions, is based mostly on opinion as to what the best approach is. This is further confused by the fact that each project has different needs, and with these needs come different tooling requirements.

My opinion on this subject is that you should always place your build dependencies inside your project when possible. Below I'll explain why.

I’ll caveat my conclusions by adding that I work in a digital agency type environment, where we create a lot of different projects, not just one. This can sometimes lead me to different conclusions than if I were working on a single internal project for my entire career at one company. I’ll leave your decision up to you.

It’s about not getting stuck in tooling dependency-version hell

When you install a tool on your build server, you are often locking your project to the version installed on your build server for as long as that server lives. This is a tight coupling that will always need to be kept in line. Quite often a third party tool changes constantly, to add to its functionality, make it easier to use, and so on.

This means that if you write code that works with its functionality in version 2.2, then when version 2.3 comes out with breaking changes you have to make a decision. Do you upgrade all your projects running on your build server to work with version 2.3, or do you simply never upgrade? If you don’t upgrade, when you start a new project do you still develop for this older version of tooling because this is the version installed on your build server?

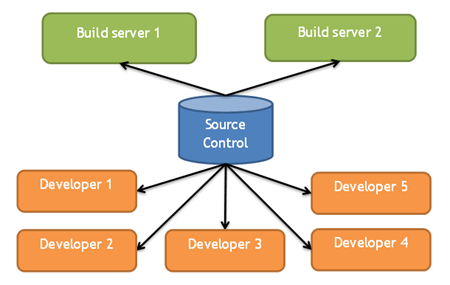

By adding your tools to your project’s source control repository you overcome this, as you can have different projects running different versions of your tool of choice. A legacy project can be happily using version 2.2, while a newer project can be running version 2.3 – this decouples your build server from a specific version of your tooling, making your whole CI process more flexible.
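One way to picture this is a small version manifest kept in each repository. The sketch below assumes a hypothetical `tools/versions.json` file and a `tools/<name>/<version>/` folder layout, and `sometool` is a made-up tool name. None of this is prescribed by any build server.

```python
import json
import tempfile
from pathlib import Path


def pinned_tool_dir(project_root: Path, tool: str) -> Path:
    """Return tools/<tool>/<version>/ for the version this project pins."""
    manifest = json.loads((project_root / "tools" / "versions.json").read_text())
    return project_root / "tools" / tool / manifest[tool]


def make_project(root: Path, tool: str, version: str) -> Path:
    """Create a throwaway project layout for the demo below."""
    (root / "tools" / tool / version).mkdir(parents=True)
    (root / "tools" / "versions.json").write_text(json.dumps({tool: version}))
    return root


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        legacy = make_project(Path(tmp) / "legacy", "sometool", "2.2")
        newer = make_project(Path(tmp) / "newer", "sometool", "2.3")
        # Each project resolves its own version; the server needs neither installed.
        print(pinned_tool_dir(legacy, "sometool").name)
        print(pinned_tool_dir(newer, "sometool").name)
```

Upgrading one project then means changing one manifest entry and committing the new tool folder, without touching any other project on the same build server.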

I recently heard Martin Fowler, one of the thought leaders on continuous integration, summarise this very well:

“All a project needs to build and run should be available and versioned in its source control repository.

A new developer on a project should be able to download everything needed for a project in one command, and run it with another. This includes third party tools.”

Martin took this even further by saying that in some scenarios where you are using open source IDEs and editors, these too should be kept in a project’s source control so that a new developer can start work on the project more readily. As I work in the Microsoft .NET development space and use Visual Studio as my IDE, this is not easily possible, and even if it were you probably wouldn’t want to. Ask anyone who runs Visual Studio to add it to your source control and they will give you a strange look.

I’m not so interested in this all-out approach, as I am only concentrating on the continuous integration, continuous delivery and build server side of this statement.

It’s about not being locked down to a single build server

When you have to run your build dependency of choice from an “installed” instance on your build server, you are making a number of assumptions and accepting some consequences.

- You have admin access to your build server, and can make upgrades to your software when required without having to hassle your sysadmin.

- If your build server dies, or you want to migrate it to a new server (maybe you want to move your build server offsite to a service such as Amazon EC2), you’ll have to set up your tool again and configure it exactly the way you had it before (in the first scenario, I hope you backed up those configuration files).

- If you want to scale your build onto multiple servers, you’ll have to do the same as the above point as well – this means if you are running a number of build servers, you have to make sure that all of their configurations are identical.

When you first set up your continuous integration configuration, choose which build server software you’re going to run, install it and get all excited by the magic you’ve stumbled upon, you rarely think you’ll ever need a second build server. You often don’t think you might want to run tasks that take a long time, like web performance tests or automated user testing with Selenium, which are usually run in parallel to your other build or deployment tasks.

By placing your tooling inside your source control, you are not limited by how many build servers you run or whether they are internal or external. And when you need to scale out your build configuration, or rebuild one of your build servers, you don’t need to configure a thing – just point your build server at your source control, and the rest will run without any setup.
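A simple guard against sliding back into server-specific setup is to check that no build step launches an executable through an absolute path. This is only a sketch: the `uses_installed_tool` name and the example steps are my own assumptions, and the check does not catch bare command names that are resolved through the machine’s PATH.

```python
from pathlib import PurePosixPath, PureWindowsPath


def uses_installed_tool(command: list[str]) -> bool:
    """True when the executable is referenced by an absolute Windows or POSIX path."""
    exe = command[0]
    return PureWindowsPath(exe).is_absolute() or PurePosixPath(exe).is_absolute()


if __name__ == "__main__":
    # Hypothetical build steps; "sometool" is a made-up tool name.
    steps = [
        [r"C:\Program Files\SomeTool\sometool.exe", "build"],
        ["/opt/sometool/bin/sometool", "build"],
        ["tools/sometool/sometool.exe", "build"],
    ]
    for step in steps:
        kind = "installed on the machine" if uses_installed_tool(step) else "repo-relative"
        print(f"{step[0]} -> {kind}")
```

Only the executable is inspected, because arguments such as Windows-style `/switch` flags would otherwise look like absolute POSIX paths.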

It’s about being able to debug the build locally on any developer’s machine

Taking the above one step further, build configurations shouldn’t be run just on build servers. It should be just as easy for any developer to download the project and run all the build tasks locally on their development machine. They should be able to use the same version of your unit test runner that your build server is using. The same database versioning tool that your build server is using. The same widget packager that your build server is using. The only way to guarantee this is if the tooling and its associated configuration are stored centrally in your source control.

Anyone who’s worked with build automation and continuous integration will tell you that you need to be able to debug your build locally to avoid any bang-head-on-wall debugging sessions.

How can you troubleshoot a broken build process if you can’t repeatedly run it locally in exactly the same configuration as on your build server?

By placing all of your tooling inside your source control this becomes easy: if you are having an issue with a build configuration, download the project and try to run it locally.
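To make that concrete, here is a minimal sketch of a single build entry point that behaves the same wherever it runs. The `run_build` function is hypothetical, and the Python interpreter stands in for checked-in tools such as a test runner or packager so that the example runs anywhere.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_build(repo_root: Path, steps: list[list[str]]) -> bool:
    """Run each step from the repository root and stop at the first failure."""
    for step in steps:
        result = subprocess.run(step, cwd=repo_root)
        if result.returncode != 0:
            print(f"step failed: {step}")
            return False
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as checkout:
        # In a real project these would be repo-relative tools, not the interpreter.
        steps = [
            [sys.executable, "-c", "print('unit tests passed')"],
            [sys.executable, "-c", "print('package created')"],
        ]
        print("build ok" if run_build(Path(checkout), steps) else "build broken")
```

If the build server calls this same entry point, a broken step can be reproduced on a developer machine by running the identical command from a fresh checkout.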

The “Licensing cost” argument, or “You’re using the wrong tool”

When I state the above, a number of people will come back and mention that their tool of choice is quite expensive, and purchasing a license for each project is simply not possible. I believe this argument, in most cases, to be quite flawed.

When setting up a project, continuous integration should be a part of whatever initial project architecture and project setup you go with. As I stated while discussing some of the things spoken about at a recent continuous integration event I attended, continuous integration is the gift that keeps on giving to your daily development processes, so much so that it should be a requirement from the beginning of your project. This means that when you look for tooling, its ability to be encapsulated inside your project should weigh heavily and be treated as a requirement.

If you are using a tool that needs you to purchase a new license for each project instance in use, try and find a new tool.

If you are using a tool that needs you to purchase a new license for each build server instance in use, try and find a new tool.

If you are using a tool that doesn’t allow being run without being “installed” along with a million registry keys instead of a directory of files, try and find a new tool.

If you are using a tool that can’t take configuration options using command line arguments or configuration files for flexible configuration, try and find a new tool.

Third party tool developers are usually not evil monolithic software houses. They are building software for you and me to use based on our needs. If the developer of your tool of choice doesn’t allow for some of the above, let them know that you wish their tool had this functionality (hello, Redgate???). If they don’t agree with you, your course should be clear: find another product. It’s that simple.

In most instances, the other product is open source and FREE, making your decision even easier.

The only time this is not possible is where a tool you use in your build is one of a kind and there is no alternative. Sometimes this means you haven’t looked hard enough, other times it means you’ll have to compromise, but if this is the case, still let the developer of your tool know. You may find that the next version or licensing agreement of your tool of choice has some new functionality or easier licensing that’s right up your alley!

With a grain of salt

Above I make some pretty definite calls regarding my view on the subject at hand, and you might not agree with all of them.

I’d like to think that in every case where you don’t agree with me, this is because you have a good reason not to that is based on the current project you are working on, and not your overall view on “best practices”. Every project is different, and the decisions we make to use each tool are based on weighing up the pay-off the tool delivers to our project.

You may use a tool that is so uber awesome that using an alternative is simply not worth your time.

You may only ever need one build server. Perhaps you are the only developer on the project and this build server is your local machine, so you don’t care about having to buy a license for every server your tool gets used on.

At the end of the day, how you come to your decisions is none of my business. But when you decide to use tooling that cannot be saved in your source control, I would hope you do so knowing what limitations this imposes on your project. Hopefully the benefits of your decision outweigh the possible negatives I've mentioned in this post.

Summary

So basically what I am trying to say here is that you should always place your build dependencies inside your solution, unless that is impossible because of licensing or some other immovable obstacle. Your choice of tooling should be strongly guided by whether it can be run from your source control repository. If your tool of choice doesn’t support being used this way, you can usually find an alternative, so don’t get locked down: put your build dependencies in your source control.
